OpenAI ARR Hit $20B, “AI Calendly” Hit $1M ARR in 3.5 Months

Runpod ARR Hit $120M

Runpod is an AI-focused cloud platform designed to host, deploy, and scale AI applications with an emphasis on developer experience and flexible GPU/CPU infrastructure.

Runpod grew out of a hobby project by former Comcast developers Zhen Lu and Pardeep Singh. They repurposed Ethereum mining rigs into GPU servers that could run AI workloads, then built the company to address the fragmented tooling developers faced when working with GPUs.

The platform’s core value proposition lies in solving key challenges for AI developers: simplifying access to high-performance GPU/CPU resources, automating scaling through serverless compute, and integrating developer tools that streamline workflows from experiment to production.
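To make "automating scaling through serverless compute" concrete, here is a minimal sketch of a queue-driven scaling rule of the kind serverless GPU platforms commonly use. It is an assumption for illustration, not Runpod's actual algorithm. `ScalingPolicy`, `desired_workers`, and the default parameter values are all hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class ScalingPolicy:
    """Hypothetical scaling parameters; not Runpod's real configuration."""
    min_workers: int = 0       # 0 allows scale-to-zero when the queue is empty
    max_workers: int = 10      # hard cap on concurrent GPU workers
    jobs_per_worker: int = 4   # queued jobs one worker is expected to absorb


def desired_workers(queue_depth: int, policy: ScalingPolicy) -> int:
    """Return how many workers to run for the current queue depth."""
    if queue_depth < 0:
        raise ValueError("queue_depth must be non-negative")
    # Enough workers to cover the queue, rounded up...
    needed = math.ceil(queue_depth / policy.jobs_per_worker)
    # ...then clamped to the policy's floor and ceiling.
    return max(policy.min_workers, min(policy.max_workers, needed))


if __name__ == "__main__":
    policy = ScalingPolicy()
    for depth in (0, 1, 9, 100):
        print(depth, "->", desired_workers(depth, policy))
```

With the defaults, an empty queue scales to zero workers, 9 queued jobs yield 3 workers, and 100 jobs hit the 10-worker cap. Scale-to-zero is the usual reason serverless compute appeals to developers: idle capacity is not billed.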

After bootstrapping to over $1 million in early revenue, the company secured a $20 million seed funding round in May 2024 co-led by Dell Technologies Capital and Intel Capital with participation from angel investors such as Hugging Face co-founder Julien Chaumond and former GitHub CEO Nat Friedman.

That investment underpinned expansion into enterprise segments and global infrastructure, culminating in a $120 million ARR milestone reported in January 2026. With this run rate and half a million active developers, Runpod is now preparing for a potential Series A to further scale its AI-native cloud offerings.

OpenAI ARR Hit $20B

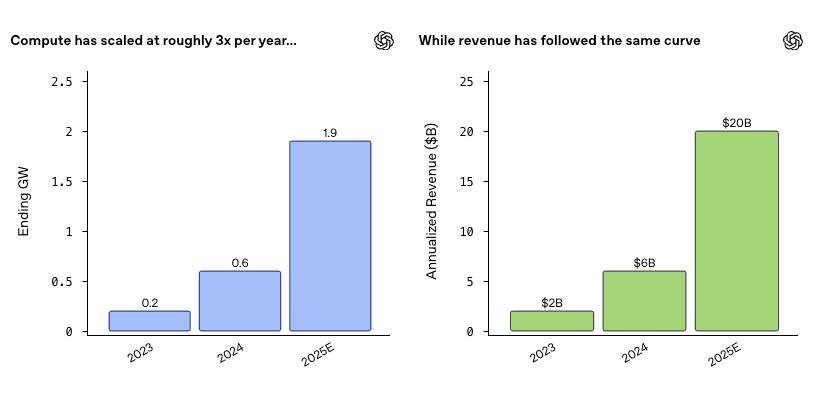

Despite recent skepticism sparked by the rapid advances of Google Gemini and Anthropic, OpenAI today disclosed fresh revenue numbers: annual recurring revenue has now surpassed $20 billion. That’s a tenfold increase in just two years—from roughly $2 billion in 2023 to $20 billion in 2025.

Compute consumption has scaled at a similar pace. OpenAI’s infrastructure demand grew from about 0.2 GW in 2023 to 1.9 GW in 2025. On the business model side, CFO Sarah Friar reiterated a core principle: OpenAI’s business model should scale with the value created by intelligence.
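Here is a quick back-of-the-envelope check of "a similar pace," using only the 2023 and 2025 figures quoted above (ARR in billions of dollars, compute in gigawatts):

```python
# Figures as quoted in the text: ARR in $B, compute demand in GW.
arr = {2023: 2.0, 2025: 20.0}
compute_gw = {2023: 0.2, 2025: 1.9}


def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values."""
    return (end / start) ** (1 / years) - 1


years = 2025 - 2023
print(f"ARR: {arr[2025] / arr[2023]:.1f}x, "
      f"CAGR {cagr(arr[2023], arr[2025], years):.0%}")
print(f"Compute: {compute_gw[2025] / compute_gw[2023]:.1f}x, "
      f"CAGR {cagr(compute_gw[2023], compute_gw[2025], years):.0%}")

# Revenue earned per gigawatt of compute in each year.
for y in (2023, 2025):
    print(f"{y}: ${arr[y] / compute_gw[y]:.1f}B ARR per GW")
```

Revenue grows about 216% a year (10.0x over two years) and compute about 208% a year (9.5x), so ARR per gigawatt stays roughly flat at $10.0B in 2023 and $10.5B in 2025. That near-constant ratio is what "similar pace" amounts to in numbers.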

Today, OpenAI operates a multi-layered monetization stack: individual and team subscriptions, usage-based APIs, and newly introduced advertising and commerce offerings, which surface relevant options when the AI is helping a user make a decision.

As intelligence increasingly penetrates scientific research, drug discovery, energy systems, and financial modeling, entirely new economic models are emerging. Licensing, IP-based agreements, and outcome-based pricing will define how value is shared. This is how the internet evolved—and AI is likely to follow the same path.

Against this backdrop, OpenAI plans to introduce outcome-based pricing and IP licensing models. This closely mirrors the core thesis I wrote about yesterday regarding AI data businesses.

From Tools to Infrastructure—and Then to Agents

When asked about strategic priorities, Sarah Friar highlighted several key shifts.

First, from tools to infrastructure. ChatGPT has moved beyond being a curiosity-driven product to becoming daily infrastructure—helping users navigate health, financial, and complex decision-making workflows.

Second, compute resource management. OpenAI is diversifying its supplier ecosystem to avoid single-source dependency and dynamically allocating either high-performance or low-cost hardware depending on task requirements.
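Allocating "high-performance or low-cost hardware depending on task requirements" can be sketched as a router that picks the cheapest pool able to meet a task's latency budget. Everything here is hypothetical. The pool names, prices, and throughputs are illustrative numbers, and the source says nothing about how OpenAI actually schedules work.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class HardwarePool:
    name: str
    cost_per_hour: float       # illustrative relative price, not real pricing
    tokens_per_second: float   # illustrative throughput


@dataclass(frozen=True)
class Task:
    tokens: int
    deadline_s: float          # latency budget for this task


# Hypothetical pools: the fast pool costs 8x per hour but is only 4x faster.
POOLS = (
    HardwarePool("low-cost", cost_per_hour=1.0, tokens_per_second=100.0),
    HardwarePool("high-performance", cost_per_hour=8.0, tokens_per_second=400.0),
)


def seconds_needed(task: Task, pool: HardwarePool) -> float:
    return task.tokens / pool.tokens_per_second


def task_cost(task: Task, pool: HardwarePool) -> float:
    """Cost of running this task on this pool (time used x hourly price)."""
    return seconds_needed(task, pool) / 3600 * pool.cost_per_hour


def route(task: Task, pools: tuple[HardwarePool, ...] = POOLS) -> HardwarePool:
    """Pick the cheapest pool that finishes the task within its deadline."""
    feasible = [p for p in pools if seconds_needed(task, p) <= task.deadline_s]
    if not feasible:
        # Nothing meets the deadline: fall back to the fastest pool.
        return max(pools, key=lambda p: p.tokens_per_second)
    return min(feasible, key=lambda p: task_cost(task, p))
```

For a 1,000-token task with a 60-second budget, the low-cost pool (10 s) wins on cost. Tighten the budget to 5 seconds and only the high-performance pool (2.5 s) qualifies. Costing per task rather than per hour matters, because a faster pool can be cheaper overall if its throughput advantage outweighs its hourly price.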

Looking ahead to 2026, the central focus is practical application. OpenAI aims to close the gap between AI’s theoretical potential and real-world deployment—particularly in healthcare, science, and enterprise services. The next phase centers on AI agents capable of taking actions across tools, maintaining long-term context, and orchestrating workflows end to end.

This closely aligns with what Sequoia has called one of the most important trends today: long-horizon agents. These agents share two defining characteristics. First, they shift AI from being a “speaker” to an “actor.” Second, their ability to complete long, multi-step tasks is improving exponentially.
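The shift from "speaker" to "actor" is easiest to see in the loop an agent runs: plan a step, call a tool, record the observation, and repeat until the goal is met. The sketch below assumes hypothetical tools (`search_flights`, `book_flight`) and replaces the model call with a fixed plan so it runs without any API. It illustrates the general pattern, not any particular vendor's agent framework.

```python
from __future__ import annotations

from typing import Callable


# Hypothetical tools; a real agent would call external services here.
def search_flights(dest: str) -> str:
    return f"found 3 flights to {dest}"


def book_flight(dest: str) -> str:
    return f"booked flight to {dest}"


TOOLS: dict[str, Callable[[str], str]] = {
    "search_flights": search_flights,
    "book_flight": book_flight,
}


def plan_next_step(goal: str, history: list[tuple[str, str]]) -> tuple[str, str] | None:
    """Stand-in for a model call that chooses the next tool from goal + history.

    A real agent would ask an LLM; this fixed plan keeps the sketch runnable.
    """
    plan = [("search_flights", goal), ("book_flight", goal)]
    return plan[len(history)] if len(history) < len(plan) else None


def run_agent(goal: str, max_steps: int = 10) -> list[tuple[str, str]]:
    history: list[tuple[str, str]] = []   # context carried across steps
    for _ in range(max_steps):            # bound on how long the agent may run
        step = plan_next_step(goal, history)
        if step is None:                  # planner reports the goal is done
            break
        tool_name, arg = step
        observation = TOOLS[tool_name](arg)   # act, rather than just answer
        history.append((tool_name, observation))
    return history
```

`run_agent("Tokyo")` returns two (tool, observation) pairs. The `history` list is the context a long-horizon agent must carry across many more steps, and `max_steps` caps how long it may run.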

Sequoia partner Sonya Huang made a point yesterday that I found especially compelling:

We’re moving from an era of product-led growth to one of agent-led growth.

Product-led growth rests on a core assumption—that humans try software. Since 2010, nearly everything in SaaS has been optimized for discovery: landing pages, free trials, and onboarding flows. But if agents are the ones choosing software, most of that becomes irrelevant.

The funnel is no longer landing page → free trial → activation → conversion. Instead, it becomes agent query → documentation scan → capability matching → recommendation, which implies a completely different kind of moat.

You don’t need the best onboarding—you need the best documentation. You don’t need viral loops—you need structured data agents can parse. You don’t need a beautiful UI on first use—you need APIs agents can actually call.
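The agent-side funnel (query → documentation scan → capability matching → recommendation) can be sketched as a filter over machine-readable capability manifests. `ToolManifest`, its fields, and the tool names are hypothetical. The source implies no particular standard for such manifests.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class ToolManifest:
    """Hypothetical machine-readable listing a vendor might publish."""
    name: str
    capabilities: frozenset[str]
    has_api: bool
    success_rate: float   # e.g. measured from the agent's own past calls


def recommend(required: set[str], manifests: list[ToolManifest]) -> ToolManifest | None:
    # Documentation scan + capability matching: keep tools that expose an API
    # and declare every capability the query needs.
    candidates = [m for m in manifests if m.has_api and required <= m.capabilities]
    # Recommendation: simply the best-performing match for this query.
    return max(candidates, key=lambda m: m.success_rate, default=None)


manifests = [
    ToolManifest("tool-a", frozenset({"book_meeting", "timezones"}), True, 0.92),
    ToolManifest("tool-b", frozenset({"book_meeting", "timezones", "payments"}), True, 0.97),
    ToolManifest("tool-c", frozenset({"book_meeting", "timezones", "payments"}), False, 0.99),
]
```

For a query needing `{"book_meeting", "payments"}`, `tool-a` is dropped for a missing capability and `tool-c` for having no API, so `tool-b` wins. Onboarding, UI, and brand never enter the ranking, and the choice is recomputed on every query.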

Agents have no loyalty and no switching costs. They simply choose what works best. In Sonya’s words, the entire distribution layer is being rewritten.

An “AI Calendly” Hit $1M ARR in 3.5 Months

Around the same time, I came across a product that uses AI to handle scheduling and time-off requests. It is essentially an AI-era version of Calendly, and it crossed $1M ARR in about three and a half months.

Conceptually, it resembles Calendly, but in practice, it’s fundamentally different.