Vibe ARR hit $100M, Chinese AI Labs are Betting on Anthropic's Playbook

Oura is hitting $1B Revenue in 2025

👋 Hey, John here. Welcome to The Signal. Today, I’m focusing on three topics:

The smart ring Oura is hitting $1B revenue in 2025.

A little-known startup, Vibe.co, hit $100M ARR.

Chinese AI is betting on Anthropic's Playbook.

Oura is expected to hit $1B ARR in 2025

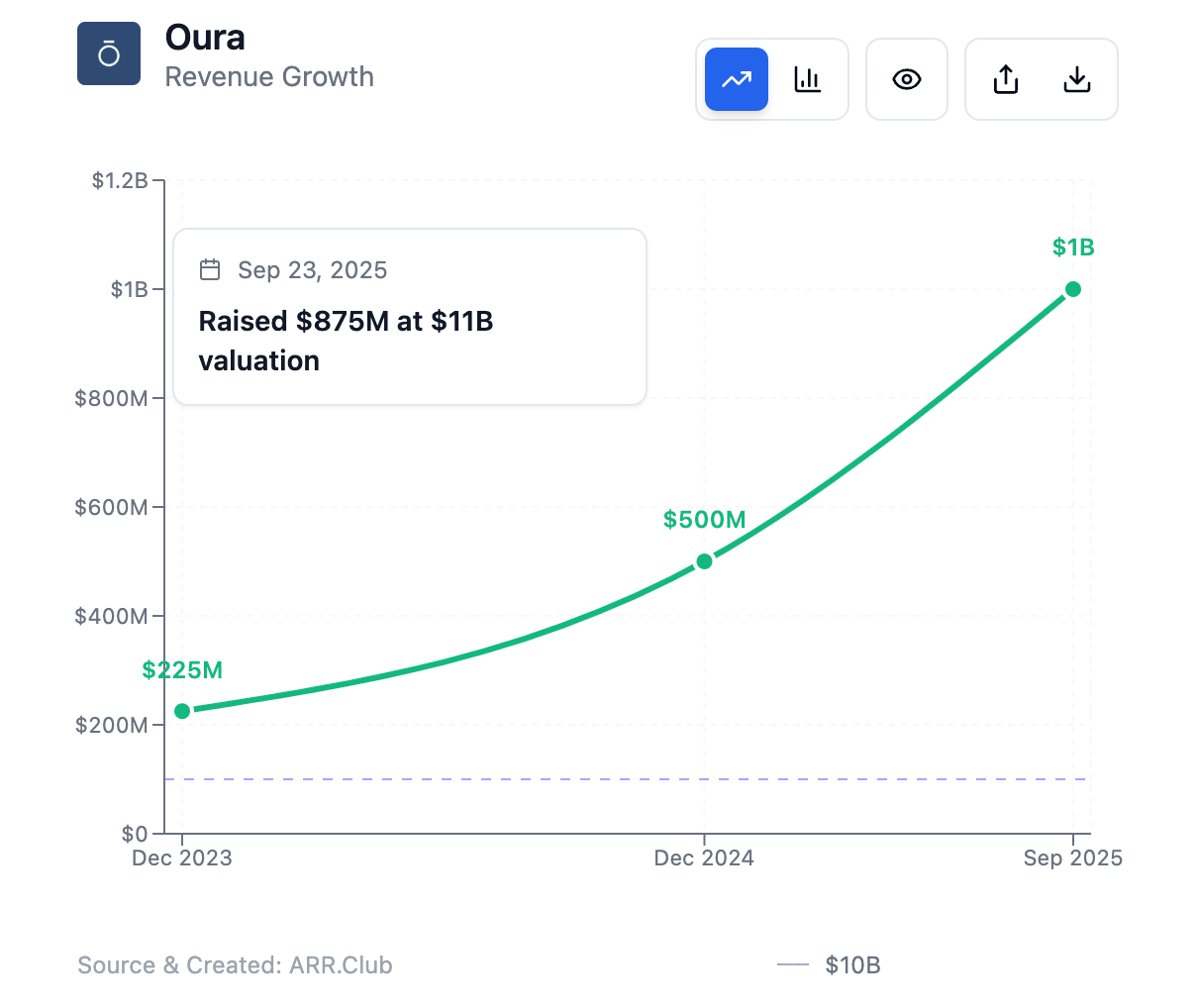

Oura, the Finnish health-tech company best known for its smart ring, is expected to generate more than $1 billion in revenue this year, doubling the $500 million it made in 2024. As for 2026, the company forecasts sales to exceed $1.5 billion.

While revenue is not always strictly equal to ARR, in Oura’s case the recurring membership/subscription component (for ring users) contributes significantly to its revenue, making the revenue figure a very strong proxy for its ARR.

Oura’s product blends high-precision hardware (the ring) that tracks sleep, readiness, activity, and other biometric signals with a subscription model for enhanced insights and analysis.

The hardware (which accounts for ~80% of revenue) is complemented by a growing base of paying subscribers (about 2 million users in 2024), each paying ~$6/month. This hybrid model solves the challenge many wearable businesses face: balancing one-time hardware sales with recurring software revenue to improve predictability and margin.
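To sanity-check how big the subscription piece is, here is a rough back-of-envelope sketch using only the figures quoted above. It assumes every subscriber pays the full ~$6 for all 12 months, which is a simplification on my part and not something Oura reports.

```python
# Figures quoted in the text above (all approximate).
paying_subscribers = 2_000_000   # "about 2 million users in 2024"
monthly_fee_usd = 6              # "~$6/month"
revenue_2024_usd = 500_000_000   # "$500 million it made in 2024"

# Assumption: every subscriber pays the full fee for all 12 months.
implied_subscription_revenue = paying_subscribers * monthly_fee_usd * 12
implied_share = implied_subscription_revenue / revenue_2024_usd

print(f"Implied subscription revenue: ${implied_subscription_revenue / 1e6:.0f}M")
print(f"Implied share of 2024 revenue: {implied_share:.0%}")
```

Under those assumptions the subscription line comes out around $144M, or roughly 29% of 2024 revenue. That is in the same ballpark as the ~20% left over once hardware takes ~80%, and the gap is plausible given that the inputs are rounded and not every member pays for a full year.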

Oura has sold 5.5 million rings to date, a notable increase from the 2.5 million reported in June 2024. The company has also raised significant funding: its Series D round was $200 million, valuing the company at about $5.2 billion as of late 2024, and it is now raising an $875M Series E that would bring its valuation to $11B.

Where Oura stands out is the Hardware + Subscriptions trend.

Vibe.co ARR hit $100M

Vibe.co, a performance-advertising platform focused on streaming TV ads, has reached $100M ARR just two years after its launch.

This makes it one of the fastest-growing software companies ever. The company supports over 10,000 performance marketers, has access to 120 million households, powers advertising through 500+ apps & channels, and integrates with 40+ marketing tools to streamline campaign workflows.

At its core, Vibe.co solves the challenge of reliable attribution, transparency, and real-time optimization in an ecosystem (CTV / streaming) that’s traditionally suffered from fragmented data, delayed feedback, and limited visibility.

Unlike many competitors, Vibe.co emphasizes verified, trustworthy data points, enabling brands to track outcomes with confidence and feed those outcomes back to improve campaign performance while minimizing waste.

Vibe.co has shown strong scalability: more than 10,000 performance marketers on the platform, major reach (120M households), large inventory (500+ apps and channels), and broad integrations, all signs of real traction in performance marketing.

Its revenue growth reflects value delivered to brands via ROI-driven campaign performance: customers are seeing uplift in purchases, high return on ad spend (ROAS), and greater campaign certainty.

Chinese AI Labs are Betting on Anthropic's Playbook

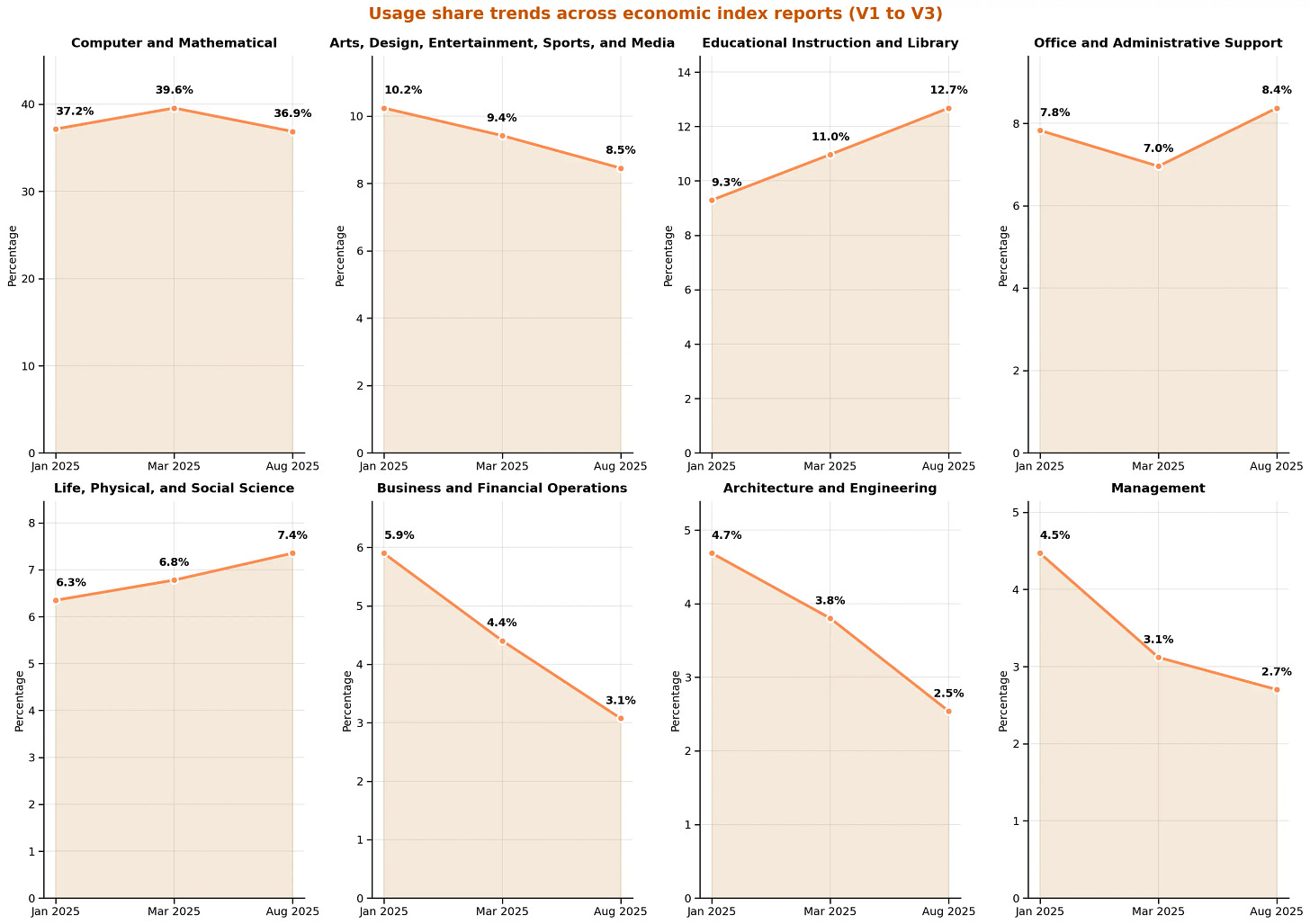

Last week, both OpenAI and Anthropic released reports on how people are using their AI models.

One thing that really stood out to me was the stark difference in user behavior: about 73% of ChatGPT usage is now for non-work-related messages, while Claude is used almost entirely for work purposes—more as a tool, assistant, or collaborator, especially in programming and enhancing human capabilities.

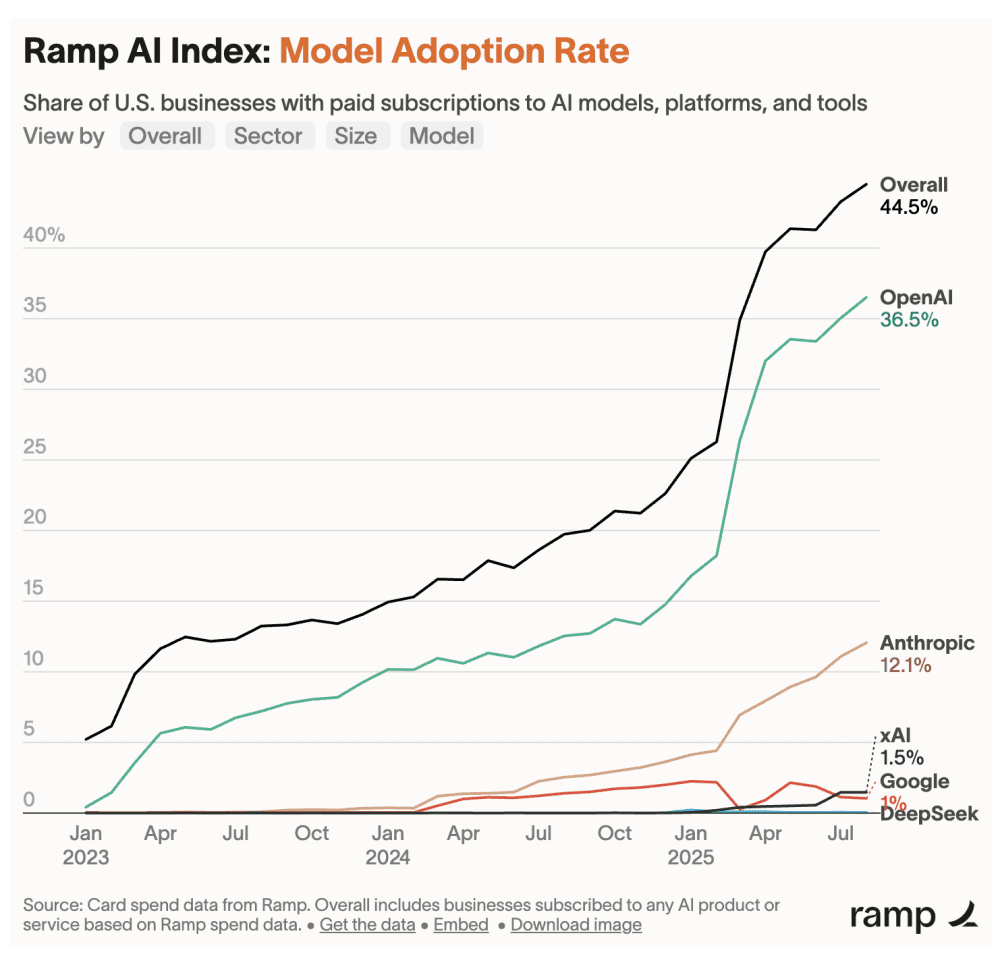

The two companies have carved out distinctly different paths: OpenAI continues to advance general-purpose capabilities, excelling in reasoning and multimodal tasks, while Anthropic has built a reputation around coding and tool use, gradually shaping itself into a go-to solution for real-world software engineering.

OpenAI is now valued at $300B, while Anthropic reached $183B. Pretty wild numbers.

But here’s something less obvious for Chinese AI labs, and maybe for other AI labs too:

Being Anthropic might be harder than chasing OpenAI

Anthropic’s rapid rise has been fueled by its focus on coding and agent capabilities. It now dominates the emerging space of agentic coding, and its product Claude Code has become the fastest-growing offering in that category—reaching $400 million in ARR within just six months. After Claude 4 launched, revenue grew fivefold in two months.

I was recently talking with friends about the future of AI in China, and we touched on a counterintuitive point: many assume catching up to OpenAI is harder than matching Anthropic, since the former requires excellence across the board, while the latter calls for focused breakthroughs.

But in reality, becoming “the Anthropic” might be even more difficult. For followers, it’s easy to get trapped in the technical roadmap set by the leader, OpenAI, and simply play catch-up. It takes courage to propose and validate a different path. That’s what Anthropic did.

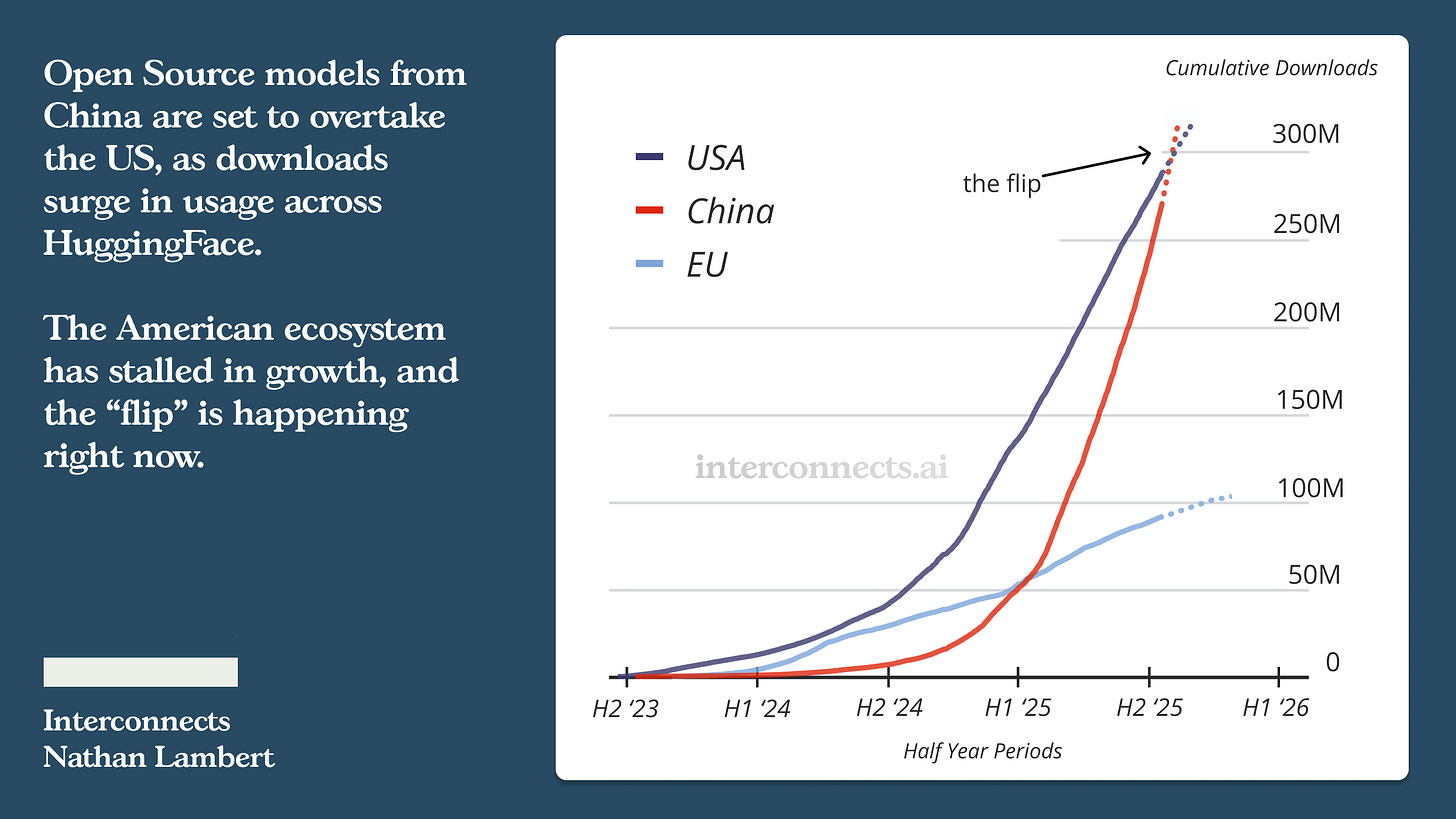

This may be why it’s only in recent months that Chinese AI companies have begun to realize that OpenAI’s approach isn’t the only valid one—and are now racing to catch up with Anthropic instead.

In early July, Moonshot AI released Kimi K2, with a technical blog titled Open Agentic Intelligence. Its official documentation included a compatibility guide allowing users to directly integrate K2 within Claude Code.

At the end of July, Alibaba’s Qwen3-Coder and Zhipu’s GLM-4.5 each made moves positioning themselves as potential alternatives to Claude Code.

In late August, DeepSeek launched DeepSeek-V3.1—which included Anthropic API compatibility, allowing developers to call its models in a similar way to how they use Claude.

And in early September, after Anthropic restricted access from Chinese companies, domestic AI players quickly responded, hoping to offer a viable replacement.
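For readers wondering what the "Anthropic API compatibility" mentioned for DeepSeek-V3.1 above looks like in practice, here is a minimal sketch of the idea: the request keeps the shape of Anthropic's Messages API, and only the base URL, key, and model name change. The endpoint, key, and model name below are hypothetical placeholders, not any provider's real values, and individual providers may differ in details such as the auth header. The request is built but never sent.

```python
import json
import urllib.request

# Hypothetical endpoint: stands in for any provider that exposes an
# Anthropic-compatible Messages API. This is not a real URL.
BASE_URL = "https://compatible-provider.example/anthropic"


def build_messages_request(base_url, api_key, model, prompt):
    """Build (but do not send) a request in the Anthropic Messages API shape."""
    body = {
        "model": model,          # provider-specific model name
        "max_tokens": 256,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/v1/messages",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "x-api-key": api_key,
            "anthropic-version": "2023-06-01",
            "content-type": "application/json",
        },
        method="POST",
    )


# Placeholder key and model name, for illustration only.
req = build_messages_request(
    BASE_URL, "sk-placeholder", "example-model", "Fix the failing test."
)
print(req.full_url)
print(json.loads(req.data)["messages"][0]["role"])
```

The practical point is that a team already built around Claude's API can, in principle, try another model by swapping a URL and a model name rather than rewriting its tooling, which is what makes this kind of compatibility a competitive move.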

So how did Anthropic successfully challenge the technical trajectory that OpenAI had laid out? The answer goes back more than a year.

Anthropic’s Bet

When Anthropic released the Claude 3 model family in March 2024, it was still largely following OpenAI’s lead—emphasizing long context and general capabilities, much like its competitor.

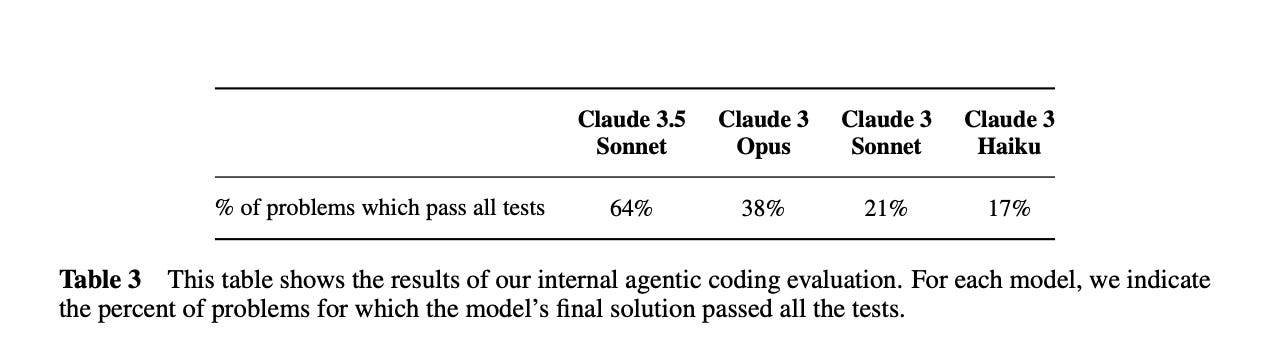

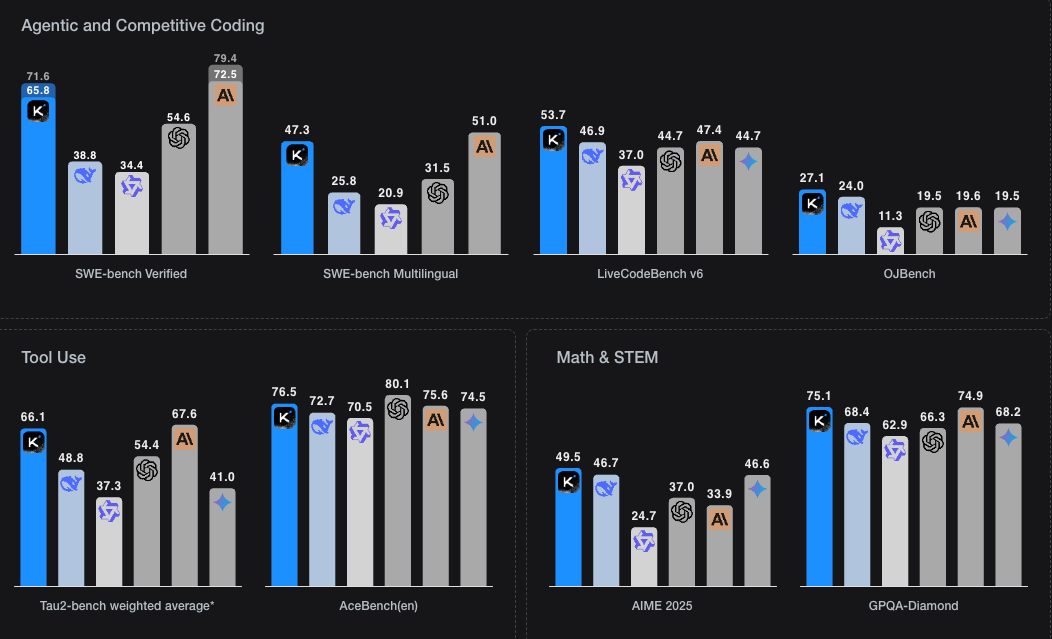

But three months later, the turning point came. With Claude 3.5 Sonnet in June 2024, Anthropic introduced its internal Agentic Coding benchmark, highlighting the model’s ability to fix bugs and add features in real codebases.

They emphasized how, “when given instructions and tools, Sonnet 3.5 can independently write, edit, and run code with sophisticated reasoning and troubleshooting. It easily handles code transitions, making it particularly effective at updating legacy applications and migrating codebases.”

In October 2024, an updated Claude 3.5 Sonnet (yes, the naming was messy—they seem to have learned since then) introduced Computer Use, elevating the model’s tool-use capabilities to a new level. The focus shifted entirely to “industry-leading software engineering capabilities.”

When OpenAI released the SWE-bench Verified benchmark in August 2024, it became a key showcase for Anthropic, almost like how Google’s Transformer paper once enabled OpenAI’s GPT models. Open research accelerates the whole field.

That November, Anthropic launched the Model Context Protocol (MCP), enabling scalable tool use for models. It soon became a de facto standard—Google and OpenAI announced compatibility, and many vendors released their own MCP tools.

Subsequent releases—Claude 3.7, 4.0, 4.1—and the rise of coding tools like Cursor based on Claude models cemented its role. Then came Claude Code, which put agentic coding front and center.

In time, Anthropic made OpenAI the follower in agentic coding—and pushed its own valuation toward $200 billion.

Escaping OpenAI’s Roadmap

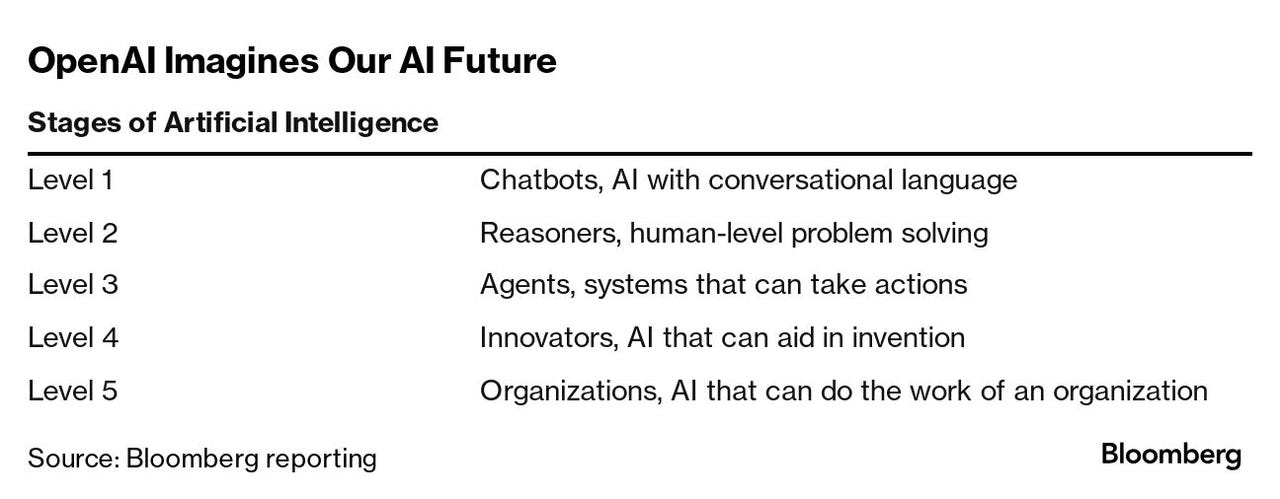

Anthropic found a way out—but many others in AI remained stuck inside OpenAI’s roadmap. When DeepSeek R1 arrived, it reinforced a common belief: you must master L2 “deep reasoning” before entering L3 agentic territory.

In a recent interview, Yang Zhilin, founder of Moonshot (maker of Kimi), repeatedly referenced Anthropic and Claude. He pointed out that Anthropic didn’t rigidly follow OpenAI’s L1-to-L5 progression—it didn’t over-invest in L2 reasoning, and instead jumped ahead to L3 agency. That, he suggested, was key to its breakthrough.

It might also be why Kimi’s K2 model prioritizes agentic capabilities over long-chain reasoning.

It’s easier to follow the leader. If a Chinese company’s strategy is to follow OpenAI, it will almost certainly remain trapped in its roadmap—always half a step (or a full step) behind in agents. And given that funding is limited for most AI startups in China, there aren’t that many shots on goal. Falling behind early might mean falling out for good.

It reminds me of something Zhang Yiming (founder of ByteDance) once said: Your understanding of a matter is your competitiveness in that matter—because, in theory, all other factors of production can be acquired. A blunter version: other than cognition, everything else can be built.

To avoid being trapped in someone else’s path, China’s AI industry needs more deep thinkers like Liang Wenfeng (Founder of DeepSeek) and Yang Zhilin (Founder of Moonshot). Think DeepSeek’s explorations in MoE, or Kimi’s work on next-gen deep learning optimizers.

Moonshot’s Kimi first became known for long-context capabilities. At launch in October 2023, it supported 200,000-character inputs—double Claude’s context at the time.

Yang even argued that “Lossless Long Context is Everything,” echoing his earlier academic work on Transformer-XL, which aimed to support longer contexts at the foundational level.

In January 2025, Kimi released a “long reasoning” model, K1.5, on the same day as DeepSeek R1—but it didn’t make much noise. Then in July, it open-sourced the trillion-parameter Kimi K2, which won broad recognition from the tech community—even Nature called it “China’s DeepSeek moment.”

When asked if Kimi wanted to become the Anthropic of China, Yang replied: “The idea of ‘becoming China’s XXX’ itself doesn’t hold—it’s hard to define things that way. The context and soil in China and the U.S. are different. Today we think more from a global perspective.”

For Chinese AI companies, the biggest value of Anthropic may be that it inspires more people to not just follow, but to find their own way.

But Maybe Anthropic Isn’t the Final Answer Either

From OpenAI’s perspective, Anthropic’s success looks like a flank attack—it got hit in an area it hadn’t prioritized.

OpenAI’s response was GPT-5-Codex, a model optimized for software development, along with the Codex CLI tool. Based on developer reactions, the experience differs from Claude’s: GPT-5-Codex leans toward thinking deeply before acting, while Claude favors acting while reasoning. For many complex tasks, thinking first may be the better choice.

So Claude may not be the final answer in software engineering, either. For AI companies in China, followers without ambition will always remain followers.