$10M ARR in 3 Months, No Funding | Xiankun Wu (Kuse)

Rebuilding Notion with a NotebookLM Mindset

Most AI products today start with a chat box.

You type a prompt. You get an answer. The interaction ends.

Kuse does the opposite, and it reached nearly $10M in ARR within three months without raising any outside funding.

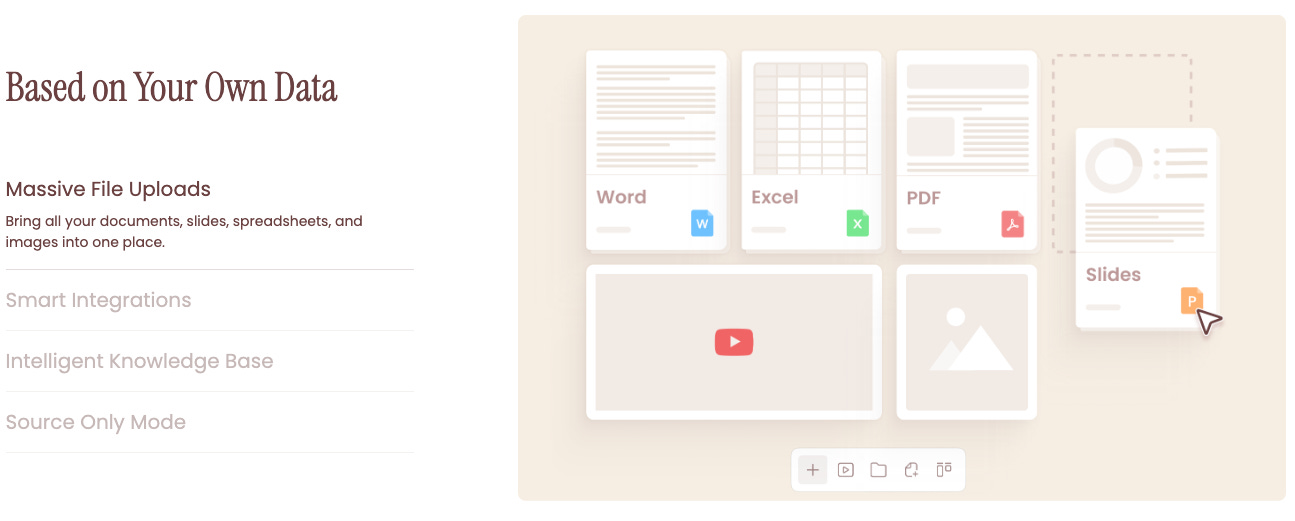

Instead of asking users what they want to generate, Kuse asks them to upload what they already have: documents, PDFs, research notes, internal files, messy information of all kinds. Only then does AI step in.

That single design decision changes everything.

I’ve spoken with Kuse’s founding team several times over the past months. For a long time, I struggled to describe what the product really was. It looks like a mix of NotebookLM, Notion, and general AI agents, yet it behaves very differently.

Kuse isn’t built for one-shot generation. It’s built for context that compounds over time.

From One-Shot Generation to Compounding Context

Most AI agents today follow a one-shot generation model. You type a prompt, get an output, and the interaction ends. The context is consumed once and then discarded.

This works well for quick questions or isolated tasks. It breaks down in real knowledge work — where information is messy, cumulative, and reused over time.

Kuse takes a fundamentally different approach: long-term asset accumulation.

Like NotebookLM, it doesn’t start with a chat box. Users upload files and information sources first. AI processing comes later.

But where NotebookLM focuses on personal learning, Kuse is designed for knowledge workers and enterprise workflows, where consistency, reuse, and context matter far more than one-off answers.

Context First

Kuse’s core philosophy is simple: Context First.

Documents, images, audio, and web pages are not treated as disposable prompts. They become persistent context assets, organized into folders and sources that form a living knowledge base.

With version 2.0, the team repositioned Kuse from a general AI tool into an AI-native, context-first system for file management and asset accumulation.

Instead of the traditional “document equals page” model, Kuse adopts a Finder-like structure, similar to macOS. Combined with AI-native workflows, this creates a closed loop:

Input → Generation → Accumulation → Reuse.
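
To make the loop concrete, here is a minimal Python sketch of the idea. It is not Kuse's actual implementation: the `Asset` and `Folder` classes and the `generate` function are hypothetical names invented for illustration, and `generate` is a stand-in for a real model call. The point it shows is that generated outputs are saved back into the same folder, so each later generation sees more context than the one before.

```python
from dataclasses import dataclass, field


@dataclass
class Asset:
    """A persistent piece of context: an uploaded source or a generated output."""
    name: str
    text: str
    generated: bool = False


@dataclass
class Folder:
    """A Finder-like container whose assets persist across generations."""
    name: str
    assets: list[Asset] = field(default_factory=list)

    def add(self, asset: Asset) -> None:
        # Input / Accumulation: store the asset instead of discarding it.
        self.assets.append(asset)

    def context(self) -> str:
        # Reuse: everything in the folder becomes context for the next run.
        return "\n".join(f"[{a.name}] {a.text}" for a in self.assets)


def generate(folder: Folder, task: str) -> Asset:
    """Hypothetical stand-in for a model call; it only reports context size."""
    n = len(folder.assets)
    output = Asset(name=f"{task}.md", text=f"{task} built from {n} assets", generated=True)
    folder.add(output)  # Accumulation: the output is saved back into the folder.
    return output


folder = Folder("Q3 research")
folder.add(Asset("notes.txt", "messy interview notes"))   # Input
folder.add(Asset("report.pdf", "market sizing figures"))  # Input

first = generate(folder, "summary")   # Generation
second = generate(folder, "brief")    # Generation that reuses the first output
print(first.text)   # summary built from 2 assets
print(second.text)  # brief built from 3 assets
```

In a one-shot model, the second call would start from nothing. Here it starts from the two uploads plus the earlier summary, which is what "compounding" means in this context.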

Chaos In, Genius Out

Kuse summarizes its approach as “Chaos in, Genius out.”

Messy, unstructured inputs go in. Clean, structured documents and web pages come out.

This marks a shift from “chat as production” to context-driven generation with compounding returns.

Kuse deliberately avoids becoming an app-building or vibe-coding platform. Its focus is narrow but powerful: turning complex information into clear, well-formatted, consumable outputs.

For free readers:

The rest of this piece dives into how Kuse found product–market fit, why formatting — not model intelligence — became its real moat, and how a team of fewer than 20 people scaled to nearly $10M in ARR in just three months.